AI may or may not break the Internet; it’s definitely set to blow our precious computing ration

There’s a lot of chat about ChatGPT and AI, nearly all of which ignores the same inconvenient truth we’ve ignored for half a century – its carbon cost. Air, water and land are finite resources, and pretending otherwise comes at a devastating price. Why do we still think of computing as carbon cost-free?

No Planet B

Even ostriches with their feathers in their ears singing la-la-la, heads in the sand, are finding it hard to ignore the reality that resources are finite. Deep down, we all know our long-term survival depends on reverting to a sustainable lifestyle. Just not yet.

If there are too many of us, and we’re so profligate, sooner or later (in fact, already) there are consequences:

- Wells do run dry.

- The marine ecosystem can no longer adapt to ocean acidification.

- Flood defences fail to keep up with rapidly changing weather patterns.

- The sea can’t absorb all our rubbish.

- The oil will run out.

Ever-optimistic, we allow ourselves to be seduced by magic-bullet solutions. We’re particularly beguiled by those that don’t require us to do, or change, anything:

- nuclear fusion

- electric aircraft

- carbon capture

- giant space blankets

Anyone paying attention knows time’s up for such future solutions, even the feasible ones.

We’re currently not doing anything near enough. Our carbon mitigations are modest, and aren’t even keeping pace with growing demand. The ‘good’ news is that every day more people are getting richer. The bad news is that they want to emulate the carbon-intensive lifestyles the rich are clinging to.

So any progress is cancelled out by new demands for energy, and our carbon emissions keep ticking up. The clock’s ticking too.

Trackside, the coaches are pointing to their stopwatches in increasing desperation, while we’re not even running fast enough to stand still. In fact, we’re going backwards.

- America and Europe are not only approving new oil and gas exploration, but still subsidising it.

- China leads the world in transitioning to renewable energy, while still consuming more coal than ever.

- As they get richer, Global South countries want to enjoy the same rewards, choices and lifestyles they see in the Global North.

These problems are challenging enough that you might imagine we’d be doing our utmost not to add to them. But AI, our latest technological breakthrough, has done just that.

AI’s Dirty Secret

We’ve written elsewhere about the Internet’s dirty secret: how it quietly pumps out twice as much carbon as aviation, which grabs all the headlines.

In that article, we homed in on the main contributor, the data centres. Misleadingly called ‘cloud servers’, these are very much earthbound, and consume vast amounts of energy just to keep cool, even when idle.

Each huge, hidden data centre requires as much power as a small town, most of which comes from fossil fuels. Our Silicon Valley Overlords (SVOs) dream of virtual ‘metaverses’, but their ‘virtual’ worlds of ones and zeroes have immediate real-world consequences for us flesh-and-blood citizens.

Drawing attention to this truth would be highly inconvenient, and bad for business. Big Business still trumps The Environment, every time, so things are getting worse.

Carbon-hungry platforms like crypto, Facebook, YouTube, Netflix and Zoom are bad enough, but now we’ve opened up a whole new way of turbocharging demand.

And we’re not even talking about it.

Listen to the Experts

That’s not quite fair: supercomputing experts are talking about it. They’re facing up to the carbon facts, and they’re panicking.

Not all tech people are blind to tech’s carbon cost, and those who know most about it are already appalled at the projections.

While the SVOs, bean-counters, venture capitalists and stock-pickers are salivating over the latest AI/Machine Learning Gold Rush, the engineers who build the machines and systems that make it possible have been doing the maths.

It’s always worth eavesdropping on such conversations. Semiconductor Engineering (strapline: ‘Deep Insights for the Tech Industry’) is a forum for such boffin-level discussions.

In the summer of 2022, as the AI tsunami was breaking, it published an article headlined ‘AI Power Consumption Exploding’. It gets straight to the point:

Machine learning is on track to consume all the energy being supplied, a model that is costly, inefficient, and unsustainable. To a large extent, this is because the field is new, exciting, and rapidly growing. It is being designed to break new ground in terms of accuracy or capability. Today, that means bigger models and larger training sets, which require exponential increases in processing capability and the consumption of vast amounts of power in data centers for both training and inference. In addition, smart devices are beginning to show up everywhere. But the collective power numbers are beginning to scare people.

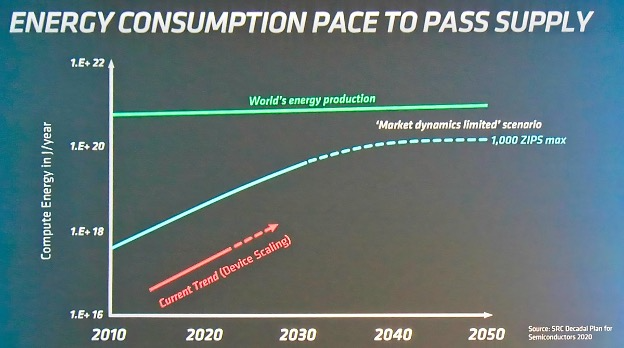

Quite a start, for a technical publication. Then comes a slide from a recent Design Automation Conference, prepared by the Chief Technology Officer of leading chip designer Advanced Micro Devices (AMD).

What Mark Papermaster revealed triggered deep unease in the high-performance computing community.

Here’s the slide – ignore the unfamiliar units of measurement and it’s a pretty basic graph. Which is what makes it so scary.

Fig. 1: Energy consumption of ML. Source: AMD

For the layman, the article requires some jargon-busting, but once you’ve translated geek-speak like ‘hyperscaling’, ‘neural networks’ and ‘NLP’, what they’re saying is:

We’ve just invented a massive new carbon-guzzling technology. If we don’t control, limit, or somehow constrain its growth, it will make things much worse much quicker. We need to ration it like there’s a war on.

AI, the 21st-century internal combustion engine

The Brave New World of AI, Large Language Models and Generative Pre-trained Transformers isn’t so new after all. Like the Industrial Revolution, the Digital Revolution exponentially consumes finite resources.

For good and ill, AI, LLMs and GPT are the digital equivalent of the Internal Combustion Engine. Like the steam engine when it out-pulled a hundred horses, AI is turning science fiction into reality overnight. Once again, new vistas of possibility, stretching to the horizon, are being revealed.

And like the internal combustion engine, something has to fuel those dreams. And that fuel isn’t sustainable.

Computing, like oil, or water, is a finite resource. It’s not just the energy demanded by the data centres – there are also the resources required to manufacture the components and store the output.

But if we can’t fix the power issue, the rest hardly matters.

A convention of (Dr.) Frankensteins

In case you thought Mark Papermaster may have been a panicky outlier, here are some other quotes from supercomputing experts, bosses and thought leaders from the same article.

There are billions of devices that make up the IoT [Internet of Things], and at some point in the not-too-distant future, they are going to use more power than we generate in the world.

Marcie Weinstein, Director of Strategic and Technical Marketing, Aspinity

Our process technology is approaching the limits of physics… From the technology side, I’m not really giving us a good chance. Yet there is this proof of concept that consumes about 20 watts and does all of these things, including learning. That’s called the brain.

Michael Frank, System Architect, Arteris IP

We have forgotten that the driver of innovation for the last 100 years has been efficiency. That is what drove Moore’s Law. We are now in an age of anti-efficiency.

Steve Teig, CEO, Perceive

If you look at the amount of energy taken to train a model two years back, they were in the range of 27 kilowatt hours for some of the transformer models. If you look at the transformers today, it is more than half a million kilowatt hours. At the end of the day, what it boils down to is the carbon footprint.

Godwin Maben, Scientist, Synopsys

He or she who has the brains to understand should have the heart to help.

Aart de Geus, Chairman & CEO, Synopsys
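Godwin Maben’s numbers are worth a quick back-of-envelope check. A minimal sketch in Python, taking his ‘27 kilowatt hours’ at face value, treating ‘more than half a million’ as exactly 500,000 kWh (so the result is a floor), and assuming his ‘two years back’ means exactly two years:

```python
# Figures quoted by Godwin Maben (Synopsys); 500,000 kWh is a lower bound.
OLD_TRAINING_KWH = 27        # transformer training run "two years back"
NEW_TRAINING_KWH = 500_000   # "more than half a million kilowatt hours" today
YEARS_APART = 2              # assumption: the gap is exactly two years

# Overall growth in training energy between the two runs.
growth = NEW_TRAINING_KWH / OLD_TRAINING_KWH

# Constant yearly multiplier that compounds to the same overall growth.
per_year = growth ** (1 / YEARS_APART)

print(f"Overall growth: at least {growth:,.0f}x")
print(f"Equivalent yearly growth: about {per_year:,.0f}x per year")
```

Even as a floor, that is growth of roughly four orders of magnitude in two years.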

Taken together, these voices make it hard not to think of the ‘Godfather of AI’, Geoffrey Hinton, who quit Google to speak out about the dangers of the technology he helped create.

It’s sobering to hear so many people who’ve devoted their careers to computing suddenly questioning their life’s work. It brings to mind Mary Shelley’s fictional archetype of scientific regret.

As the memory of past misfortunes pressed upon me, I began to reflect upon their cause—the monster whom I had created, the miserable daemon whom I had sent abroad into the world.

Dr. Victor Frankenstein.

Before we all give up, and retreat, like Frankenstein’s monster, to what’s left of the Arctic, here’s one last quote.

It contains some hope – but note that it requires non-scientists to do something other than passively watch as our SVOs play out their AI Wars.

There is some commercial pressure to reduce the carbon impact of these companies, not direct monetary, but more that the consumer will only accept a carbon-neutral solution. This is the pressure from the green energy side, and if one of these vendors were to say they are carbon neutral, more people will be likely to use them.

Alexander Wakefield, Scientist, Synopsys

So saving the day is up to ‘the consumer’.

Just to be clear, that means us.

The inspiring case of Dr. Daniel and the Yellow Dog

We recently published our review of the Pilot See Through Carbon Competition.

It relates what a recently founded, zero-budget volunteer network, with the Goal of Speeding Up Carbon Drawdown by Helping the Inactive Become Active, did with an unsolicited gift: a voucher worth US$500,000 for supercomputer-grade cloud computing, expiring in 5 months.

The network was See Through News, a social media network; the donor was the cloud computing management platform YellowDog.

The review reveals how, after teaming up with another zero-budget network of Global South researchers, the Competition found 4 winners, only two of whom were able to complete their compute before the deadline.

It’s a seat-of-the-pants thriller, and we’re trying to avoid plot spoilers. For the purposes of this article, all you need to know is that one of the two winners who made it under the wire was:

- Dr. Daniel Zepeda Rivas, a Mexican architect and engineer who’d spent years accumulating a vast trove of data on the carbon intensity of buildings around the world, plus weather data bearing on how future buildings will need to be not only zero-carbon, but able to withstand the new weather extremes we’ve created.

At the time of publication, the review lacked one piece of information. Now that we have it, it turns out to be extremely pertinent to the issue at hand.

It may also provide the spark of hope that this awesome new technology may become part of our carbon solution, rather than contributing to the problem.

That missing link was how much of the $500,000 prize Daniel and Johnson’s projects had consumed.

And – more relevant to this article – the carbon cost of the compute.

Which is more eco-friendly – sunny Arizona, gay Paree, or grey London?

YellowDog has now crunched its own numbers on the carbon-reduction element of Daniel’s task. Daniel had gathered a huge amount of data, and performed complex calculations in many combinations. It was what YellowDog’s Tech Team called ‘a good fit’, a worthy professional challenge, made even more intriguing by the rapidly-looming deadline.

With the data crunched, the admin numbers for the compute are now in. They’re fascinating, chilling and inspiring, but it takes a little background to understand why.

As we explain in our article on the carbon cost of computing, YellowDog is to cloud computing what a mortgage broker is to banking.

A broker has no money to lend, but is expert at finding the right mortgage for your individual needs from third parties. You could do all the legwork yourself, calling round every possible mortgage lender and going through all their terms and conditions. In practice, no one does, because:

a) it’s far too complicated and we’re not experts

b) the experts have access to bulk discounts unavailable to the rest of us

c) the experts monitor way more options than any of us could ever access

Likewise, YellowDog owns no servers itself, but is expert at finding the best deal for any client, to suit their particular needs.

What distinguishes YellowDog is how they quote for a job. Cloud computing management platforms, whether brokers, or actual suppliers like Amazon Web Services, Google, Microsoft Azure, Oracle or Alibaba, give you these choices:

- If you’re in a hurry, the fastest option

- If you want to save money, the cheapest option

What makes YellowDog unique is their 3rd menu choice:

- If you want to reduce carbon, the lowest-carbon option
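The three-option menu can be pictured as choosing which attribute of a quote to minimise. This is a hypothetical illustration, not YellowDog’s software: the site names and every number are invented, and comparing grid intensity alone assumes the job draws the same energy wherever it runs.

```python
from dataclasses import dataclass


# Hypothetical quotes for one job; every number here is invented for illustration.
@dataclass
class Quote:
    site: str
    hours_to_finish: float   # how long the job would take at this site
    price_usd: float         # what the job would cost at this site
    g_co2eq_per_kwh: float   # carbon intensity of the local grid


QUOTES = [
    Quote("site-a", hours_to_finish=40, price_usd=9_000, g_co2eq_per_kwh=450),
    Quote("site-b", hours_to_finish=55, price_usd=7_500, g_co2eq_per_kwh=250),
    Quote("site-c", hours_to_finish=70, price_usd=8_200, g_co2eq_per_kwh=40),
]

# The three menu choices, each expressed as the quote attribute to minimise.
# Assumption: the job uses the same energy at every site, so grid intensity
# alone is enough to rank sites by carbon.
OBJECTIVES = {
    "fastest": lambda q: q.hours_to_finish,
    "cheapest": lambda q: q.price_usd,
    "lowest-carbon": lambda q: q.g_co2eq_per_kwh,
}


def pick(quotes, objective):
    """Return the quote that scores best on the chosen objective."""
    return min(quotes, key=OBJECTIVES[objective])


for name in OBJECTIVES:
    print(name, "->", pick(QUOTES, name).site)
```

With these made-up quotes, each menu choice picks a different site, which is exactly why the third option matters.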

Incidentally, low carbon is increasingly inseparable from low cost. Calculating this is particularly complicated for YellowDog, because of their other superpower. They do ‘distributed computing’, i.e. seamlessly switching computing tasks from one data centre to another, even on the other side of the world, on the fly.

This makes carbon consumption calculations even trickier, requiring some advanced mathematics. Their current model uses a few placeholder average values while they work out how to deliver perfect real-time data, but even so, the numbers are remarkable.

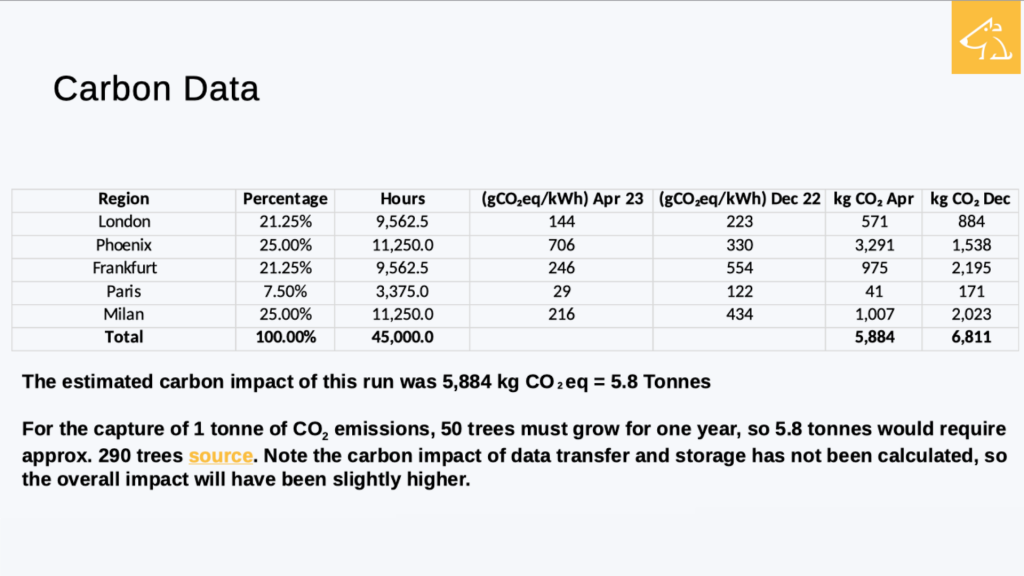

Consider this chart, showing the different locations where Daniel’s epic data run was computed. YellowDog tried to optimise for low carbon, but the size of Daniel’s dataset (37.5TB), the complexity of the calculations (45,000 hours) and the tight timeframe (10 days for the whole shebang) meant they had to get less fussy as the cut-off approached.
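That squeeze can be pictured as a greedy allocation: fill the cleanest grids first, and only spill onto dirtier ones when the clean capacity available before the deadline runs out. This is a hypothetical sketch, not YellowDog’s actual scheduler. It assumes the 45,000 hours are compute-hours that can be split freely between sites; the site names, intensities and capacities are invented.

```python
# Hypothetical sites: (name, gCO2eq/kWh, compute-hours available before the deadline).
# Intensities and capacities are invented for illustration only.
SITES = [
    ("site-a", 450, 20_000),
    ("site-b", 60, 15_000),
    ("site-c", 200, 12_000),
    ("site-d", 30, 10_000),
]


def allocate(job_hours, sites):
    """Greedily place compute-hours on the cleanest grids first, spilling over
    to dirtier ones only when cleaner capacity runs out before the deadline."""
    plan = []
    remaining = job_hours
    for name, intensity, capacity in sorted(sites, key=lambda s: s[1]):
        if remaining <= 0:
            break
        hours = min(capacity, remaining)
        plan.append((name, hours))
        remaining -= hours
    if remaining > 0:
        raise ValueError(f"{remaining} hours cannot be finished before the deadline")
    return plan


print(allocate(45_000, SITES))
```

Give the cleanest site enough capacity (in effect, relax the deadline) and the whole job lands there; tighten it, and more of the work spills onto carbon-heavy grids.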

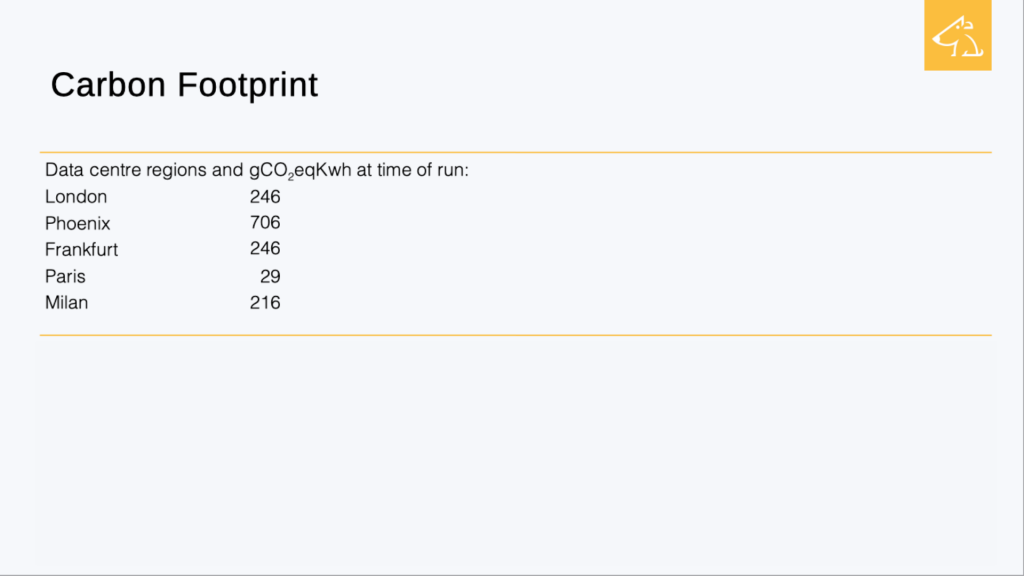

So here’s YellowDog’s graphic – don’t worry about the metric – we’ll shortly use whale testicles to explain what ‘gCO2eq/kWh’ means.

Just know that the bigger the number, the more carbon was emitted in Daniel’s compute…

Would you have guessed that crunching numbers is nearly 3 times more polluting in Phoenix than in London, and 24 times more polluting than in Paris?

This is because:

- 62% of Arizona’s electricity comes from coal and gas

- The UK power grid has a diverse and dynamic energy mix, easily cherry-picked for renewables

- 75% of France’s electricity is nuclear (the French figure doesn’t account for the carbon costs of decommissioning nuclear power stations, but let’s park that for now)

This carbon figure reflects the average energy mix of each location at the time of the compute run (April 2023), optimised for carbon reduction within the constraints of the deadline.

If, like YellowDog, you really want to dive into the weeds, the time of year can also make a big difference.

These figures are monthly averages, hence approximations, but still tell an amazingly granular story. YellowDog is working on using real-time energy mix data to make these numbers even more accurate.

But what do these numbers mean? What on earth is a ‘gCO2eq/kWh’ when it’s at home?

To explain, we’re going to have to talk bollocks.

Serious bollocks.

Whale bollocks.

1 Right Whale Testicle v. 1 Female Sperm Whale

Some more jargon-busting:

- The ‘g’ in gCO2eq/kWh stands for ‘gramme’, as in 1/1,000 of a kilogramme

- CO2eq means ‘carbon dioxide equivalent’

- kWh is ‘kilowatt hour’, a standard unit of energy consumption (a kettle draws 2-3 kilowatts, so 1 kWh is roughly the electricity it takes to keep a kettle boiling for 20-30 minutes)

So gCO2eq/kWh measures carbon intensity: how many grammes of carbon dioxide equivalent are released into the atmosphere for every kilowatt hour of electricity Daniel’s compute used.
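Put as arithmetic: multiply the kilowatt hours used at each location by that location’s carbon intensity to get grammes of CO2eq, add up across locations, and divide by 1,000 for kilogrammes. A minimal sketch; the location labels and figures are hypothetical, since the per-location energy use of Daniel’s run isn’t given here.

```python
# Hypothetical per-location energy use (kWh) and grid intensity (gCO2eq/kWh).
RUNS = {
    "location-1": (4_000, 50),
    "location-2": (2_000, 250),
    "location-3": (1_000, 500),
}


def total_emissions_kg(runs):
    """Sum energy x intensity over locations, converting grammes to kilogrammes."""
    grams = sum(kwh * g_per_kwh for kwh, g_per_kwh in runs.values())
    return grams / 1_000


print(total_emissions_kg(RUNS))  # 1200.0
```

Note how the smallest slice of energy here (location-3) contributes as much carbon as a slice twice its size on a cleaner grid: where the work runs matters as much as how much work there is.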

Each gramme contributes to more global heating, and continues to do so for centuries.

That last chart shows the total carbon cost of Daniel’s compute – 5,884kg (it would have been 6,800kg had they done exactly the same thing in December).

But what does that number mean?

Most of us have trouble visualising big numbers, and See Through News spends a lot of time trying to find effective ways to do so (here’s our attempt to describe a gigatonne, smuggled into a school Languages Day speech).

5,884kg is roughly 5.9 metric tonnes.

That’s about as much as an adult elephant.

But YellowDog had to shift the computation to more carbon-intensive locations to complete Daniel’s huge compute as the deadline loomed, hence the multiple locations in Paris, Frankfurt, London, Milan and Phoenix.

Without the deadline, they could have run the entire compute in Parisian data centres. This would have generated 541kg of CO2.

That’s about as much as a single testicle of a Right whale.

If they’d done the whole run in Phoenix, it would have generated 13,165kg of CO2.

That’s about as much as an entire female Sperm whale.
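Because each of these scenarios describes the same job, the ratio between any two totals is also the ratio between the carbon intensities involved. A quick check, using only the figures quoted in this article:

```python
# Totals quoted in this article for Daniel's compute, in kg of CO2eq.
SCENARIOS_KG = {
    "all in Paris": 541,
    "as actually run (April 2023)": 5_884,
    "same run in December": 6_800,
    "all in Phoenix": 13_165,
}

baseline = SCENARIOS_KG["all in Paris"]
for label, kg in SCENARIOS_KG.items():
    # How many times worse each scenario is than the cleanest option.
    print(f"{label:<30} {kg:>7,} kg  {kg / baseline:5.1f}x Paris")
```

The Phoenix-only run comes out at about 24 times the Paris-only run, matching the comparison in YellowDog’s chart; the deadline-squeezed real run sits at roughly 11 times.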

So what?

YellowDog’s granular, forensic analysis of the carbon cost is what we should be doing with everything, all the time. We should treat any additional carbon emission as a precious, finite commodity, and deploy it sparingly, and with great deliberation.

To reach a sustainable future before we screw Planet A up too disastrously, we need to start this parsimonious approach yesterday.

Emitting carbon should be seen as a necessary, temporary evil, while we rush to find sustainable substitutes.

We should treat anything involving carbon emissions like gold, diamonds, caviar – anything we’re used to valuing highly. But we’re still treating it like it’s nothing.

Cloud computing currently does involve some carbon emissions. Even in the best-case scenario, Daniel’s compute would have cost a whale swinger.

But here’s the point. The purpose of Daniel’s compute was to generate original data that can immediately be put to practical use on the ground, around the world, to measurably reduce carbon.

- Not to generate Fake News

- Or AI images of the Pope in a Balenciaga coat

- Or to play games

- Or any of the other million and one trivial ways we’re currently using our valuable, finite computing resources

That’s why, as the Synopsys scientist in the Semiconductor Engineering article suggested, ultimately we, as ‘consumers’, need to decide how much we’re going to consume, and how we eke out our remaining carbon rations.

One final detail – how much of the US$500,000 prize did Daniel’s compute take up?

We know, but we’re not going to tell you.

It’s about time we started counting carbon, instead of dollars.