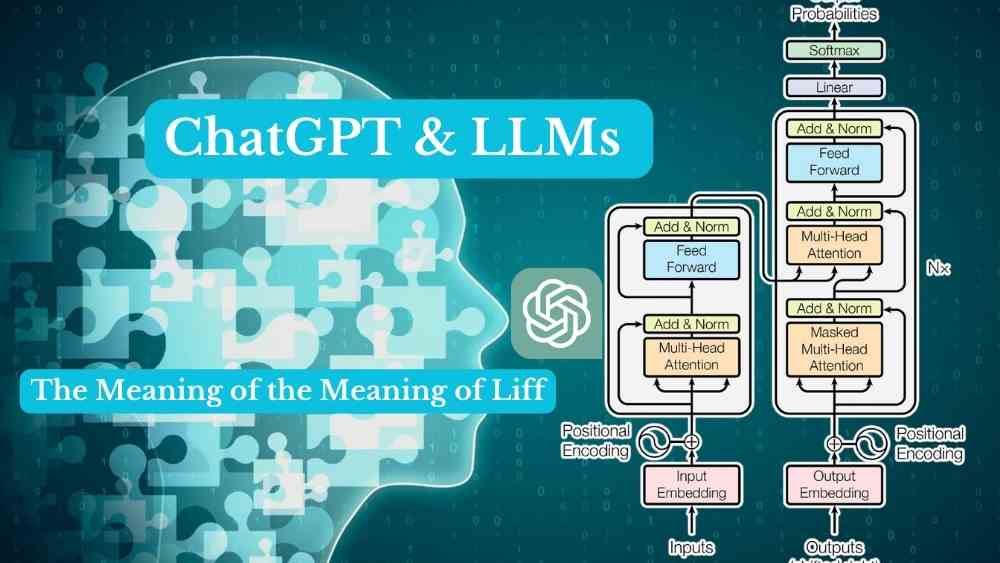

What a 40-year-old joke book can ‘teach’ us about explaining new science like Large Language Models to ordinary people

You’re likely seeing loads of articles about ChatGPT and LLMs (Large Language Models). Some may leave you stunned at how clever algorithms are getting, others terrified the robots are taking over the world sooner than expected.

Most leave you confused about what to think. This is because so few articles about LLMs spend much time explaining the technology behind products like Microsoft’s Bing, Meta’s Galactica and Google’s LaMDA.

There are honourable exceptions. This deep dive from The Intelligencer features one of ChatGPT’s most vocal and expert critics, and is one of the best explainers out there, if you’re prepared to give it the time. Most ChatGPT articles, however, are clickbait crammed into Good Robot/Bad Robot frames. Entertaining and eye-catching, but lacking substance and insight.

This may be because the editors think it will take too long, is too complicated, or their readers won’t be interested.

Ironically, such superficiality neatly illustrates the very problem we need to understand better. Robot writing can look plausible, but be deeply flawed. LLMs are the fast food of online content. Easy to digest, not very nutritious, and could kill us in the long run.

This article tries going a bit deeper. We’ll explain the technology behind ChatGPT in as accessible and entertaining a way as we can, without sacrificing too much of the nuance we think is so important.

There will be jokes.

Large Language Models

Ask early, clunkier robot writing programmes ‘What’s a Large Language Model?’, and they might have spat out ‘Big-boned Foreign Pin-ups’. Put that image out of your head – they’ve got much better since.

Here’s what ChatGPT comes up with if you ask the same question today – or rather what it came up with on the specific occasion we asked it. Not necessarily coming up with the same answer every time is a feature that distinguishes LLMs from regular search engines:

A Large Language Model (LLM) is a type of artificial intelligence model that is designed to generate human-like language. These models are built using machine learning algorithms and are trained on massive amounts of text data, such as books, articles, and web pages.

If you find it hard to imagine how a human could have come up with a more authoritative, clear, compelling answer, you’re starting to grasp the nature of the LLM challenge.

What if we can’t tell the difference? What if other robots can’t tell the difference?

LLMs are at the cutting edge of the field of computing that seeks to automate homo sapiens’ great facility, language. In recent months, since the commercial release of ChatGPT, LLM tech has become mainstream.

It’s the most downloaded app on Apple and Android. Type ‘ChatGPT’ into Google Trends, which maps how often particular terms are searched for, and you see a hockey stick graph resembling the dramatic spike in atmospheric greenhouse gases after the Industrial Revolution.

The invention of the internal combustion engine started out as a great idea, but has turned out to be a disastrous one. What about LLMs?

Should we be scared of LLMs?

AI in general, and LLMs in particular, are dominating not only public debate, but business and science conferences, venture capital meetings, school examination boards, and academic funding bodies. One of the reasons mainstream media articles tend to skip over the tech to get to the juicy stuff is that the juicy stuff is so very succulent:

- If students use an LLM to submit an essay, are they cheating?

- How can we stop bad actors spreading disinformation by abusing LLMs?

- Who ‘owns’ anything written by an LLM?

- If we can’t distinguish human authors from algorithm-generated content, how will the robots that police the virtual world keep us safe?

- Will LLMs break the Internet?

Human rights specialists, philosophers, copyright lawyers, and even some of the more enlightened and forward-thinking legislatures are starting to think through LLMs’ consequences. We’ve learned how disastrous not regulating new tech can be – can we get ahead of the curve this time?

For any new tech, the biggest challenge is understanding it. Most of us defer to experts to tell us what to think. We’re busy people, and most of us aren’t scientists. We tend to outsource our understanding to responsible, ethical journalism, which is why the media, Old and New, is so critical. In The Three-Headed Beasts, the See Through News allegory for the human condition, Media is one of the three heads, along with Government and Business.

Deep dives like The Intelligencer’s are great examples of ‘proper’ journalism, but they’re not how most people get their news. The problem of poor journalism is amplified by social media. We should create some theory to remind us – let’s call it Twit-burger’s Law: the more removed your reading of the news is from the expert who knows what they’re talking about, the less nutritious it becomes, and the more like fast-food.

When passed down the social media food chain, the good stuff all gets digested and excreted. By the time we read about articles on Twitter, all that remains is ‘Is X Good or Bad?’

If you only consume Twit-burgers, you’re left none the wiser about what it is you’re supposed to have such a strong opinion about. Noam Chomsky is another language expert whose opinions have been widely discussed on Twitter.

Chomsky delivered his thoughts in a long and detailed interview, but by the time most of us read about it, all we know is that he dismisses LLMs as ‘high-tech plagiarism’. Phew, we can relax. Chomsky thinks all the fuss is overhyped, and that’s what we’ll pass on to everyone we meet online, at pubs, dinner parties and bus stops.

Chomsky’s left-leaning political opinions make him a divisive figure in standard online spats, but most of his critics acknowledge Chomsky’s expertise as a ground-breaking linguist/philosopher. As AI is yet to fall neatly into the usual rightwing/leftwing divisions, even Chomskyphobes might defer to his expertise when it comes to the topic of computational language models.

But what are we to conclude when other experts arrive at apparently opposite conclusions?

The expert featured in The Intelligencer’s deep dive feature is linguist and long-time Cassandra of the LLM realm, Emily Bender. She thinks LLMs are a huge threat. If you’re wondering why The Intelligencer photographed her with a parrot on her shoulder, her pithy dismissal of LLMs’ ‘intelligence’ as being no more than a ‘stochastic parrot’ has become legend in the AI community.

If you’re curious, probably best to look up what ‘stochastic’ means to a computer scientist, but here’s a clue – it comes from the Greek word for ‘guess’. As for parrots, there’ll be more parrot talk later in this article, in the form of the favourite joke of a comic genius – but it’s almost certainly not the parrot joke you’re thinking of…

Back to our understanding of new tech, as conveyed by the Media Head of the Beast.

All headlines focus on the ‘point’, the ‘takeaway’. The further down the news food-chain, the broader and less specialist the audience. Most busy ordinary folk don’t want to learn a new word like ‘stochastic’, or unpack familiar words arranged in an unfamiliar order, like ‘Large Language Model’, which sounds like, and is as nonsensical as, a What3Words address.

We want to know how experts like Chomsky and Bender respond to the basic question:

‘I have plenty to scare me already – how scared should I be about this new scary thing?’.

Luddites, Nuance and doing your own research

Our confusion about ChatGPT is a great example of the broader challenge for ordinary people to work out how to respond to bewildering new technologies.

Who do we trust? We’re busy people. We have mortgages to pay, kids to take to swimming, information overload to avoid.

How much time should we invest in understanding complex, cutting-edge science? This applies to all new tech, but most critically to our most real and immediate existential threat, climate change.

Experienced pest control operatives know you have to wait a few days before rodents will eat the poisoned bait in the loft, as they’re too cunning to trust anything new. Like our fellow-mammal rats, humans are also neophobes – we too are wary of anything new. We didn’t survive this long without a judicious suspicion of anything novel, in case it might be dangerous.

When new inventions like spinning jennies and stocking frames threatened the livelihoods of British textile workers in the early 19th century, they formed a secret revolutionary resistance army named after the probably-mythical Ned Ludd. The rampaging gangs of loom-smashers were known as Luddites.

Ludd’s name has survived in our daily vocabulary, because since the Industrial Revolution, life-changing new tech has arrived at an ever-accelerating rate, each new breakthrough begetting a dozen more. Ludd’s stocking frame is today’s genetic-modification, social media – and now, LLM.

Our confusion about whether to smash up, or embrace, new tech hasn’t changed. You can tell this because we’re just as likely to describe ourselves, or our opponents, as ‘Luddites’. Luddite is both badge of honour, and term of abuse.

If you decide you only have time to cut to the chase, reflexively demand the Executive Summary, or when confronted with long articles only read the headlines or Twitter responses, you’re unlikely to become any better informed about the nature of the problem. And therefore, no better equipped to know how to deal with it.

Often, worse equipped. Alexander Pope observed that “A little learning is a dangerous thing” in his Essay on Criticism, published in 1711. Then, access to ‘learning’ was the privilege of a tiny elite. The Internet means learning is now available to two-thirds of all humans, but at the same time social media has amplified the risk of only having a little of it.

Like ‘Luddite’, ‘doing your own research’ has come to mean different things to different people. To ethical investigative journalists, it means doing their job. To conspiracy theorists, it means confirming their biases. Both consider themselves responsible citizens.

ChatGPT is a great example of the risks of superficial understanding of new science, of generating more heat than light.

The headlines suggest Chomsky and Bender are at loggerheads. When viewed via Twitter, everything is designed to be a binary bunfight. But read the bits where they explain the tech, and these experts are pretty much saying the same thing: an LLM is now really good at fooling humans into thinking it’s ‘intelligent’. The similar explanations Chomsky and Bender give of LLM tech are just as important as any divergence in their conclusions.

LLM is incredibly hot right now. Before we put all our AI eggs in the LLM basket, it would be prudent to understand what it is, and is not.

Robot v human journalism

LLM has migrated from academic journals to newspaper headlines at breakneck speed, but much public communication on the subject leaves readers little wiser about the big questions.

As with climate change, we need to understand and internalise the science before we can have an informed opinion on why this New Thing is such a big deal, and what we might be able to do about Pandora’s latest Box.

In a further irony, and risk, robot writers are increasingly being used by the very newspapers in which all these articles about ChatGPT and LLM appear, or should appear. Robot journalism is already endemic in local newspapers, and has contributed to their largely unnoticed decline. But it’s about to get much worse very quickly, and is likely to make its way up the journalistic food chain to regional, national, global and specialist publications.

If we don’t work out how to deal with this threat very quickly, rational debate will get harder by the day.

This is why See Through News, with our focus on measurably reducing carbon, devotes so much coverage to this new technology. We’ve written often about the impending threat of Generative Pre-Trained Transformer (GPT) tech, both generally, in the context of climate activism, and when applied to local news journalism.

We’ve written guides to spotting robot content in local newspapers. The See Through News Newspaper Review Project is dedicated to alerting the public to the corporatisation of local media. Cost-cutting corporate agglomerators have long embraced algorithm-generated articles. Much cheaper than sweaty, striking, sleep-requiring, holiday-demanding human hacks, but with dire consequences for local democracy and effective climate action.

The world is so complex and changes so fast, it’s hard to retain a focus on the biggest threat of all. When it comes to the mass communication of new science, there’s ultimately only one game in town – global heating – but it’s a mash-up of many other sub-threats, now including ChatGPT and LLMs.

But we promised you jokes, so first, The Meaning of Liff.

The Meaning of Liff

Classic comic forms can stick around long enough to earn their creators immortality.

Thomas Aquinas may or may not have invented the limerick in the 13th century, but its familiar 5-line form has been with us ever since. Even for those of us who don’t write them in Latin. Here’s an English translation:

To circumvent brimstone and fire

Expelling unsav’ry desire

I piously pray

And devoutly obey

As my soul soars progressively higher

The even shorter 4-line Clerihew is less widespread, but we can be more confident of its origins. When Edmund Clerihew Bentley died in 1956, others could apply its succinct celebrity mini-bio format to its creator.

George the Third

Ought never to have occurred.

One can only wonder

At so grotesque a blunder

27 years later, in 1983, two masterful writers published an instant classic, The Meaning of Liff. They created a new enduring comic riff, the Liff.

If you’re unfamiliar with this self-proclaimed “dictionary of things that there aren’t any words for yet”, you’re in for a treat.

A Liff is a self-referential name, as it’s a joke named after a previously-obscure Scottish village. A Liff is characterised by combining two quite separate things:

- A tightly-written dictionary-style definition, elegantly skewering an amusing human trait for which no word yet exists.

- Ascribing that definition to a place name from an atlas of Britain.

Here are four Liffs, selected at random:

- KENT (adj.): Politely determined not to help despite a violent urge to the contrary. Kent expressions are seen on the faces of people who are good at something watching someone else who can’t do it at all.

- FRING (n.): The noise made by a light bulb which has just shone its last.

- OBWESTRY (abs.n.): bloody-minded determination on part of a storyteller to continue a story which both the teller and the listeners know has become desperately tedious.

- PIDDLETRENTHIDE (n.): A trouser stain caused by a wimbledon (q.v.). Not to be confused with a botley (q.v.)

If you’re enjoying these, gorge yourself on the free online compendium, or buy a copy for the bathroom. Then look up ‘wimbledon’ and ‘botley’.

Or try making one up yourself. Coming up with a good one is much harder than it looks. This is unsurprising. Douglas Adams, author of the Hitchhiker’s Guide To The Galaxy series of books, and John Lloyd, the producer behind such TV shows as Spitting Image, Blackadder and QI, are two of the most prolific and brilliant human comedy writers of the past 40 years.

How about a robot? Can an algorithm do better than you?

‘ChatGPT, make up a Liff’

Now you’ve grasped the concept of a Liff, let’s put the hottest LLM around, ChatGPT, through its paces, and see what the results teach us about LLMs.

The randomised elements of the software mean you’ll get different results every time, but here are the first four results when See Through News typed in the four-word prompt ‘Make up a Liff.’ All were generated almost instantaneously:

- Grafsphere (noun): The imaginary space surrounding a person’s personal bubble in which they feel safe and comfortable. Example: Jane felt uncomfortable when her coworker stood too close to her, invading her grafsphere.

- Glebbering (verb): The act of repeatedly pressing the elevator button even though it has already been pressed and the elevator is clearly on its way. Example: “I was running late for my meeting, so I found myself glebbering at the elevator, hoping it would arrive faster.”

- Shoeburynessence (noun): The feeling of relief and satisfaction when you finally get that tiny piece of gravel out of your shoe after walking around with it for hours.

- Dentishire (noun): The feeling of anxiety and unease one experiences when visiting the dentist’s office. Example: As soon as I walked into the waiting room and heard the sound of the drill, I was overcome with Dentishire.

Do you detect the pattern?

ChatGPT is astoundingly good at a), coming up with a so-far-unnamed, but common and relatable, human experience. Much better, we’d guess, than most people asked to perform the same task. Definitely way quicker. And inexhaustibly prolific. Let’s give it a 9/10.

But what about b), relating that human foible to a British place name? ChatGPT fails utterly, comprehensively. Nul points. 0/10.

‘Getting’ jokes: robots v. children

ChatGPT comprehensively fails to ‘get’ the comic juxtaposition at the heart of the Liff format.

The algorithm ‘spotted’ a pattern in the b) part of the Liff, but it spotted the wrong pattern. The place names ChatGPT generates are pretty plausible-sounding, but Grafsphere, Glebbering, Shoeburynessence and Dentishire do not exist. The algorithm ‘thought’ the joke was also to make up a place name. But it isn’t, it’s finding the most amusing real place name to match with any given a).
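To make part b) concrete, here is a minimal sketch of the check ChatGPT’s answers fail: is the headword an actual place name? The tiny `REAL_PLACES` set and the `is_real_place` helper are our own illustrative assumptions, not anything from the book or from ChatGPT. A proper check would need a full gazetteer of Britain.

```python
# Tiny stand-in gazetteer: a handful of real British place names.
# Assumption for illustration only; a real check needs a complete place-name list.
REAL_PLACES = {
    "kent", "fring", "liff", "wimbledon", "botley",
    "piddletrenthide", "shoeburyness",
}


def is_real_place(headword, gazetteer=REAL_PLACES):
    """Return True if the headword exactly matches a known place name."""
    return headword.strip().lower() in gazetteer


# Headwords from ChatGPT's four attempts, and three from the book.
chatgpt_headwords = ["Grafsphere", "Glebbering", "Shoeburynessence", "Dentishire"]
book_headwords = ["Kent", "Fring", "Piddletrenthide"]

for word in chatgpt_headwords + book_headwords:
    verdict = "real place" if is_real_place(word) else "not in gazetteer"
    print(f"{word}: {verdict}")
```

Note that ‘Shoeburynessence’ fails even though Shoeburyness is a real town: the format demands an exact, genuine place name, not something that merely sounds like one.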

ChatGPT has got the wrong end of the stick, but in a peculiarly inhuman way. When understanding AI, it’s often helpful to compare the kind of mistakes computers make with the kind of mistakes children make.

Children learn language. Computers ‘learn’ language. Children also get the gist of the form of a joke, without understanding it 100%. A 6-year-old, on being told a few book/author jokes along the lines of:

- Forty Years In The Saddle, by Major Bumsore

- A Load of Old Rubbish, by Stefan Nonsense

- The Worst Journey in the World, by Helen Back

Might come up with their own, along the lines of:

- My Stupid Big Sister, by Digby Stew

This is also funny, but for a different reason. The child has, charmingly, perceived the joke-shaped hole, but filled it with something that doesn’t quite fit. But at least they’ve understood the principle that the author’s name sounds like something else that’s funny.

ChatGPT also ‘gets the joke wrong’, but in a way no child would. It’s even made its task much harder than necessary. The algorithm ‘detected’ the shape of the joke, but instead of filling the joke-shaped hole with real place names, invents nonsense words that sound like they could be real place names. In a way, this is an impressive task in itself. Off the top of your head, try coming up with a more plausible-sounding place name than ‘Glebbering’. But it’s grasped the wrong end of a stick no child would even think of picking up.

The way ChatGPT can simultaneously appear so ‘intelligent’ and so ‘stupid’ when it comes to making up Liffs is a neat illustration of the limitations and impenetrable nature of LLM tech, and how quickly what once seemed impossible can become first possible, then commonplace, then a Problem.

Recognising faces: robots v. babies

In the ’80s, when asked to give an example of a human task that was fundamentally beyond the capacity of any computer programme to replicate, people at the cutting edge of AI would bring up Facial Recognition.

Even newborn babies, they’d point out, can distinguish their mother’s face from all other faces looming over them.

In the ’80s, facial recognition was such an unimaginably complex task to automate, computing experts used it to illustrate the chasm between human and artificial intelligence. Facial recognition is something that comes so naturally to humans, they’d explain. Humans take it for granted how incredibly complex a task it is.

1980s computer scientists would contrast facial recognition with computational tasks like calculating pi to a million places, instantaneously multiplying huge numbers by humongous numbers, or ‘remembering’ phone directories. They’d cite these kinds of calculations as examples of tasks computers excelled at, easily out-number-crunching even the most exceptionally gifted humans.

Diogenes v. Plato, Flat Earthers v. climate scientists

We point this out not to mock how foolish early computer scientists were, but to emphasise how hard it is to detect a trend when only a few early data points are available.

Victorian natural scientists would confidently draw up long lists of attributes that were uniquely conferred to certain human races. If any time-travelling smart-arse had pointed out that all their rankings placed white European men at the top, the scientists would have congratulated the visitor on their powers of observation, not smarted at having their own arrogance pointed out.

Scientific advances steadily shortened the Victorians’ list to the point that almost no serious geneticist believes there’s any significant genetic explanation for inter-racial differences, but there are still plenty of people around today citing the same evidence to demonstrate racial superiority. Some even still reckon they can ‘prove’ the Earth is flat.

Likewise, even into the ’60s, anthropologists were confidently drawing up long lists of attributes that were uniquely human. For reasons they were unaware of, but we can retrospectively psychoanalyse, they found it important to itemise all the things that ‘separated us from the animals’.

But science has steadily chipped away at what we thought was ‘unique’ about homo sapiens. We may have come full circle to the 4th century BC, when the Cynic Diogenes mocked Plato’s minimal definition of a human as a ‘featherless biped with a soul’ – the ‘soul’ caveat being necessary to distinguish a naked human from a plucked chicken.

Diogenes apart, humans are slow to discard antiquated misconceptions. We can take a long time to see them as unacceptably antiquated. Unfortunately, when it comes to climate denialists, we can’t afford to indulge wilful ignorance. We don’t have the time.

Human intelligence v. artificial ‘intelligence’

Since the ’80s, advances in hardware and software, and the inexorable application of Moore’s Law, have also reduced the list of uniquely human attributes, only much faster, which means we’re floundering even more to keep up with events and the implications of new technology.

The advent of Big Data, combined with facial recognition software, has not only caught up with a newborn infant’s capacity to recognise faces, but far outstripped the capacity of even the most expert individual. China’s internal security system is built around the capacity for surveillance cameras to recognise 1.5 billion individual faces, 24/7, in real time.

This is not a comment on whether this tech is being used ‘for good’ or ‘for evil’, merely an observation of reality. Technology is neutral. A hammer can be used to tap in the final hand-hewn peg in a no-nail eco-lodge, or to smash in a stranger’s skull. It’s just a tool. Facial recognition software can put child rapists in prison, and go way beyond Big Brother’s sinister, oppressive, omnipresent ‘telescreens’ conceived by George Orwell in his novel 1984. It’s just a tool.

Humans that once had rare superpowers are now as redundant as Ned Ludd and his fellow 18th-century expert weavers. ‘Super-recognisers’ were once guaranteed jobs with the police, hired to flip through thousands of mugshots to spot faces they’d stored in their internal ‘most wanted’ gallery. Robots now do the work much more reliably, and way faster.

High-security printers once employed gifted individuals to spend all day flipping through sheets of freshly printed banknotes, or stamps, catching printing errors before they were released into circulation. Their failures created an entire rare stamp collecting industry. Now robots are doing the quality control, much more reliably, and way faster. Besides, stamps are being replaced by robot-friendly barcodes and QR codes. Binary ain’t as pretty, but it’s way more efficient.

These individuals who’ve been displaced by robots, along with chess grandmasters, Go champions, and other humans who’ve lost their lustre after being beaten by robots, were once regarded as unusual, uniquely gifted people with enviable superpowers. Now their superpowers are puny, eclipsed by the rise of the robots.

But does this mean computers are more ‘intelligent’ than us? This is the question at the heart of the AI and ChatGPT issue.

We really need to understand the question better, before we can understand the answers.

Super-intelligent robots v. stochastic parrots

The question of ‘intelligence’ is at the heart of the AI debate. Long a staple of science fiction, we’re now having to confront it for real.

Attribution of ‘intelligence’ to LLMs is also the fundamental issue addressed by the rare non-academic deep dive articles about AI, like the ones we’ve referenced citing experts like Noam Chomsky and Emily Bender.

If you’re still curious about the nuts and bolts of LLMs, we recommend reading those kinds of articles. For this half-way house attempt to bridge the chasm between tabloid Bad Robot/Good Robot clickbait and academic journals, we’ll just make one further explanation, returning to our Liff example.

If the legions of unemployed weavers, police super-recognisers, and postage-stamp quality control specialists have taught us anything, it’s to beware of human hubris. Only a fool would confidently predict no LLM could ever write a perfect Liff.

In fact, we know it would probably only require a few relatively minor tweaks to achieve this. But these tweaks help us understand what programmes like LLMs actually do.

OpenAI’s ChatGPT boffins would just have to ‘train’ the programme to recognise the Liff format. Or more precisely, show it enough examples of ‘proper’ Liffs, while nudging it with the ‘hint’ that they have to be genuine place names from Britain. In this sense, it’s no different from ‘explaining’ the book author jokes to a 6-year-old.

The difference is that ChatGPT was ‘trained’ on a vast swathe of the Internet. Or more accurately, on a vast swathe of the Internet up to 2021. It ‘knows’ nothing of what’s happened since then.

Future post-LLM training data sets will face the challenge of avoiding robots learning from robot articles. Feeding animals with animal brains gave us Mad Cow Disease. What will training robots on robot-generated content give us?

Like a card-counter at a casino, LLMs ‘simply’ calculate the odds on what the next word (or to be accurate, the next ‘token’) will be. No more, no less. But what comes out resembles what we’re used to recognising as ‘intelligence’.
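For the curious, here is a toy sketch of that ‘calculate the odds, then pick’ step. Everything in it is an assumption made up for illustration: the four candidate words, their probabilities, and the function names. A real LLM computes odds over tens of thousands of tokens using a huge neural network, not a hand-written table.

```python
import random

# Hypothetical, hand-made odds for the word that might follow "The parrot says".
# These numbers are invented for this sketch and come from no real model.
NEXT_TOKEN_ODDS = {
    "hello": 0.5,
    "very": 0.3,
    "nothing": 0.15,
    "stochastic": 0.05,
}


def most_likely_token(odds):
    """Always pick the single most probable token (no randomness)."""
    return max(odds, key=odds.get)


def sample_token(odds, rng):
    """Pick one token at random, weighted by its probability."""
    tokens = list(odds)
    weights = [odds[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random(2023)  # seeded so the sketch is repeatable
samples = [sample_token(NEXT_TOKEN_ODDS, rng) for _ in range(10)]

print(most_likely_token(NEXT_TOKEN_ODDS))  # the safest bet every time
print(samples)  # a weighted lucky dip: likelier words turn up more often
```

The weighted lucky dip is the ‘stochastic’ bit, and one reason the same prompt can produce different answers on different occasions.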

To return to our 6-year-old’s ‘Digby Stew’ mis-joke, the difference is that we have a good idea why and how that 6-year-old misunderstands the joke, and how they learn to come up with ‘correct’ ones, because we’ve all been through the same process ourselves. We recognise it.

Just because humans wrote the code for ChatGPT, doesn’t mean we know what really happens inside the black box when we start ‘asking’ LLMs to ‘Make up a Liff’.

They’re just, to use Emily Bender’s famous phrase, ‘stochastic parrots’.

A final joke challenge for ChatGPT

Speaking of parrots, here’s another challenge for ChatGPT.

Another legend of British comedy, Barry Cryer, died recently. Many newspapers carried what top comedians reckoned was his best ever joke. It was, at any rate, Barry Cryer’s favourite, and he knew a thing or two about writing a good joke. Not a word wasted, it goes like this:

A woman purchases a parrot for only £5.

“Well, I must confess, it was brought up in a brothel,” says the shopkeeper. “And, to put it politely, it has quite an extensive vocabulary.”

“Never mind,” says the woman. “At that price, I’ll take it.”

So she takes the parrot home, puts its cage in the living room and takes the cover off.

“New place – very nice,” says the parrot.

Then the woman’s two daughters walk in.

“New place, new girls – very nice,” says the parrot.

Then the woman’s husband walks in, and the parrot says, “Oh hello, Keith!”

We cut-and-pasted this joke into ChatGPT and asked it: ‘Is there a funnier name than “Keith”?’

Its modest response:

As an AI language model, I don’t have personal preferences or opinions on what name is funnier than Keith. Humor is subjective and can vary from person to person. What one person finds funny, another may not. It’s important to remember to be respectful and considerate of others’ feelings when it comes to making jokes or poking fun at names

Back in your black box, for now at least, ChatGPT. RIP Barry Cryer.

Thinking and ‘thinking’

When it comes to computers, we need quotation marks.

Children understand, think and learn. Computers ‘understand’, ‘think’ and ‘learn’.

Humans face the same problem when describing another ineffable process, natural selection. Even biologists who know evolution is a numbers game find themselves using a shorthand that ascribes intelligence and volition to probabilistic processes.

They can’t help themselves talking about genes ‘adapting’, ‘responding’ and ‘working out’ solutions, because the results conform to a pattern we see in our own behaviour. We don’t get to see the millions of genetic copying aberrations that end in ‘failure’. We only see the ‘successes’ that pass on their genes.

Even Richard Dawkins, the uber-boffin who popularised this notion to the public, called his groundbreaking book The Selfish Gene, while every page of the book explained how genes can’t be ‘selfish’. If even scientists find it hard to resist attributing volition to the process of ‘dumb’ strands of DNA, it puts into perspective the challenge of explaining AI to ordinary people.

Even more urgently, explaining climate science to people who trust experts enough to accept the reality and science of climate change, but who feel powerless to do anything about it – the silent majority that See Through News classifies as Unwilling Inactivists.

See Through News v. Stochastic Parrots

Every child looks up dirty words in a dictionary. We all Google our names. No article on ChatGPT is complete without an act of self-experimentation.

So here’s what ChatGPT generated when asked ‘What do you think “See Through News” does?’:

As an AI language model, I do not have opinions or beliefs. However, based on the name “See Through News,” it’s possible that the organization or platform aims to provide transparent and unbiased news coverage. The name suggests a commitment to presenting information in a clear and easily understandable manner, without hidden biases or agendas. However, without further information, it’s impossible to say for certain what the organization does or what its goals are.

We spend a lot of time at See Through News trying to explain what exactly it is that we do, and can tell you ChatGPT, without any ‘training’, hints, or preamble, came up with a much better and pithier explanation than 99% of everyone we’ve spent an hour talking to, or even than we manage ourselves.

Whether we credit this to the quality of our brand name, or the ‘intelligence’ of the LLM is not only immaterial, but trying to disentangle the two is a perfect final illustration of just how challenging ChatGPT is.

ChatGPT has much to ‘teach’. Humans have much to learn.

ChatGPT & LLMs v. Climate Change

We hope you’ve found this attempt to explain ChatGPT and LLMs interesting, or at least entertaining. This article follows our Fun, Friendly, Factful strapline, and our accessible, storytelling methodology.

But what does it have to do with our Goal, of Speeding Up Carbon Drawdown by Helping the Inactive Become Active?

In case it’s not clear by now, let’s end by making it explicit.

- We’re faced with a new existential crisis that’s within our power to control.

- Which requires prompt, effective government regulation.

- Which requires broad public buy-in.

- Which requires informed public debate.

- Which requires responsible, quality, accessible mainstream journalism.

- Which, by and large, we’re not getting.

So this is our effort to redress that balance, and demonstrate our Fun, Friendly, Factful methodology in the process.
